What a good Power BI consulting engagement looks like

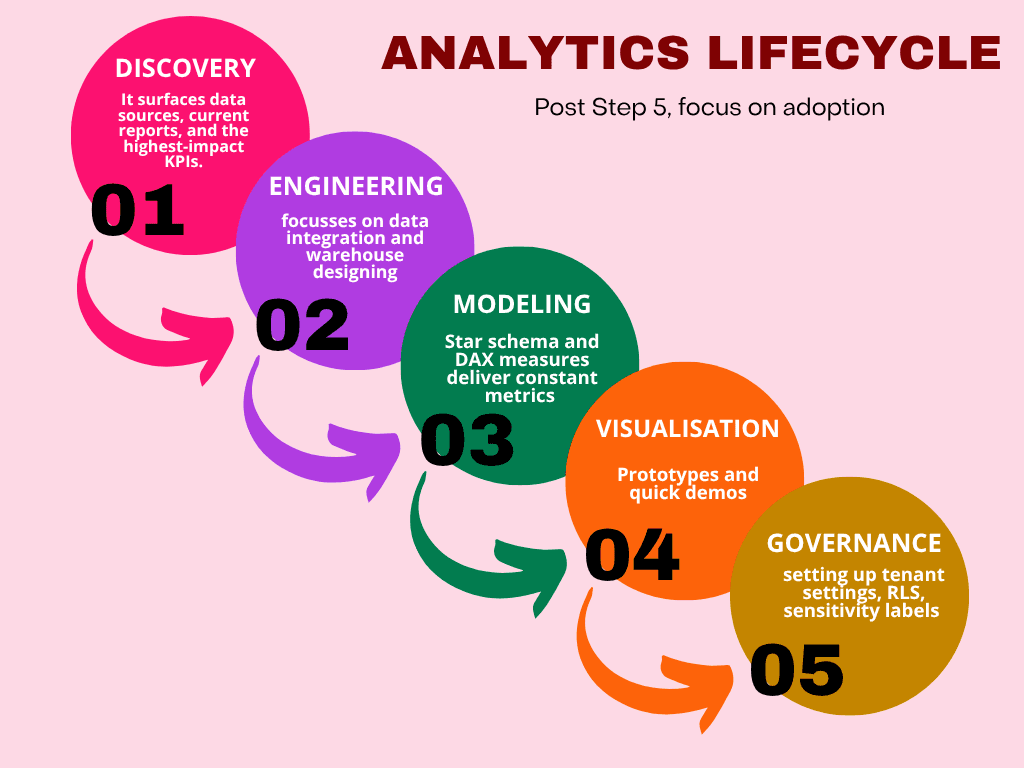

A mature engagement follows the analytics lifecycle: discovery → engineering → modeling → visualization → governance → adoption. Expect the provider to blend these skills, not just deliver a one-off report.

What to expect, in practice

Discovery & rapid audit. The first phase surfaces data sources, current reports, and the highest-impact KPIs. Deliverable: a short roadmap that prioritizes wins with clear metrics to validate success. (You’ll later plug these metrics into the ROI model to show payback.)
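Here is a minimal Python sketch of the payback idea. It assumes each roadmap KPI can be expressed as an estimated monthly benefit in dollars. The `KpiImpact` class, the function names, and every figure are hypothetical placeholders, not part of any specific ROI model.

```python
from dataclasses import dataclass


@dataclass
class KpiImpact:
    name: str
    monthly_benefit: float  # hypothetical estimated value per month, in USD


def payback_months(engagement_cost: float, impacts: list[KpiImpact]) -> float:
    """Months until cumulative monthly benefit covers the one-off engagement cost."""
    monthly = sum(k.monthly_benefit for k in impacts)
    if monthly <= 0:
        return float("inf")  # no measurable benefit means the cost is never paid back
    return engagement_cost / monthly


def simple_roi(engagement_cost: float, impacts: list[KpiImpact], horizon_months: int) -> float:
    """ROI over a fixed horizon: (total benefit - cost) / cost."""
    total_benefit = sum(k.monthly_benefit for k in impacts) * horizon_months
    return (total_benefit - engagement_cost) / engagement_cost


# Placeholder KPIs from a discovery roadmap; all figures are illustrative.
impacts = [
    KpiImpact("analyst hours saved", 4_000.0),
    KpiImpact("reduced ad waste", 6_000.0),
]
print(payback_months(40_000.0, impacts))  # 4.0
print(simple_roi(40_000.0, impacts, 12))  # 2.0
```

A real model would also discount future benefits and include license and run costs. This sketch only shows how validated KPIs feed a payback figure.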

Data integration & warehouse design. Consultants should both recommend and implement a canonical data layer — ETL, staging, and an efficient schema that supports accurate attribution and time intelligence. This makes subsequent dashboards much cheaper to maintain.

Semantic modeling & reusable measures. A proper star schema and a library of DAX measures (rather than logic duplicated across PBIX files) deliver consistent metrics across reports. Look for a provider that promotes shared, certified datasets over dozens of independent PBIX files.
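In Power BI, the shared measures would be written in DAX inside one published semantic model. The Python sketch below only mimics the idea, using hypothetical in-memory tables. It shows a fact table that holds keys and numbers, two dimension tables, and a single dictionary of measure definitions. Every "report" calls that dictionary instead of re-implementing the logic.

```python
from collections import defaultdict

# Dimension tables keyed by surrogate key (hypothetical sample rows).
dim_date = {1: {"month": "2024-01"}, 2: {"month": "2024-02"}}
dim_product = {10: {"category": "Bikes"}, 20: {"category": "Helmets"}}

# Fact table: one row per sale, holding only foreign keys and numeric columns.
fact_sales = [
    {"date_key": 1, "product_key": 10, "revenue": 1200.0, "cost": 800.0},
    {"date_key": 1, "product_key": 20, "revenue": 300.0, "cost": 120.0},
    {"date_key": 2, "product_key": 10, "revenue": 900.0, "cost": 600.0},
]

# Which fact column joins to which dimension table.
DIMENSIONS = {"date": (dim_date, "date_key"), "product": (dim_product, "product_key")}


# The shared "measure library": each business metric is defined exactly once.
def total_revenue(rows):
    return sum(r["revenue"] for r in rows)


def gross_margin_pct(rows):
    revenue = total_revenue(rows)
    cost = sum(r["cost"] for r in rows)
    return (revenue - cost) / revenue if revenue else 0.0


MEASURES = {"Total Revenue": total_revenue, "Gross Margin %": gross_margin_pct}


def evaluate(measure: str, dimension: str, attribute: str) -> dict:
    """Group fact rows by a dimension attribute, then apply one shared measure per group."""
    table, key = DIMENSIONS[dimension]
    groups = defaultdict(list)
    for row in fact_sales:
        groups[table[row[key]][attribute]].append(row)
    return {label: MEASURES[measure](rows) for label, rows in groups.items()}


# Two different "reports" reuse the same definitions instead of copying the logic.
print(evaluate("Total Revenue", "date", "month"))  # {'2024-01': 1500.0, '2024-02': 900.0}
print(evaluate("Gross Margin %", "product", "category"))
```

If the margin definition changes, only `gross_margin_pct` is edited, and every report picks up the change. That is the benefit a certified dataset provides over logic copied into each PBIX.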

UX-led dashboard development. Expect iterative prototypes and quick demos, with feedback cycles that steer each visual toward the decisions executives actually make. Effective design accelerates adoption and reduces time-to-value.

Governance & operationalization. Consultants should help set up tenant settings, row-level security (RLS), sensitivity labels, and publishing patterns. These are the practical guardrails that prevent sprawl and the erosion of trust.
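Dynamic RLS is commonly implemented as a role whose DAX filter matches a user-mapping table against `USERPRINCIPALNAME()`. The Python sketch below reproduces only the filtering logic. It assumes a hypothetical security table that maps users to regions. Two further assumptions apply: user names are compared case-insensitively, and a user with no mapping sees no rows rather than all rows.

```python
# Hypothetical security table mapping each user principal name (UPN) to the regions they may see.
SECURITY_TABLE = [
    {"upn": "ana@contoso.com", "region": "EMEA"},
    {"upn": "ben@contoso.com", "region": "AMER"},
    {"upn": "ben@contoso.com", "region": "APAC"},
]

# Hypothetical fact rows carrying the column the role filters on.
SALES = [
    {"region": "EMEA", "revenue": 100.0},
    {"region": "AMER", "revenue": 250.0},
    {"region": "APAC", "revenue": 75.0},
]


def allowed_regions(upn: str) -> set[str]:
    """Regions mapped to this user (case-insensitive match is an assumption of this sketch)."""
    return {r["region"] for r in SECURITY_TABLE if r["upn"].lower() == upn.lower()}


def apply_rls(rows: list[dict], upn: str) -> list[dict]:
    """Return only the rows the signed-in user may see; unmapped users see nothing."""
    regions = allowed_regions(upn)
    return [row for row in rows if row["region"] in regions]


print(sum(r["revenue"] for r in apply_rls(SALES, "ben@contoso.com")))  # 325.0
print(apply_rls(SALES, "unknown@contoso.com"))  # []
```

The important design choice is default deny: a missing mapping should hide data, not expose it. That behavior is worth covering in UAT.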

Handover & CoE enablement. Beyond code, a strong engagement includes training, runbooks, and a Center of Excellence playbook so your team keeps improving dashboards after the consultants leave.

When these elements are combined, the consulting engagement acts like a multiplier — increasing adoption, improving data quality, and making it easier to capture ROI.

Engagement models — which fits your situation?

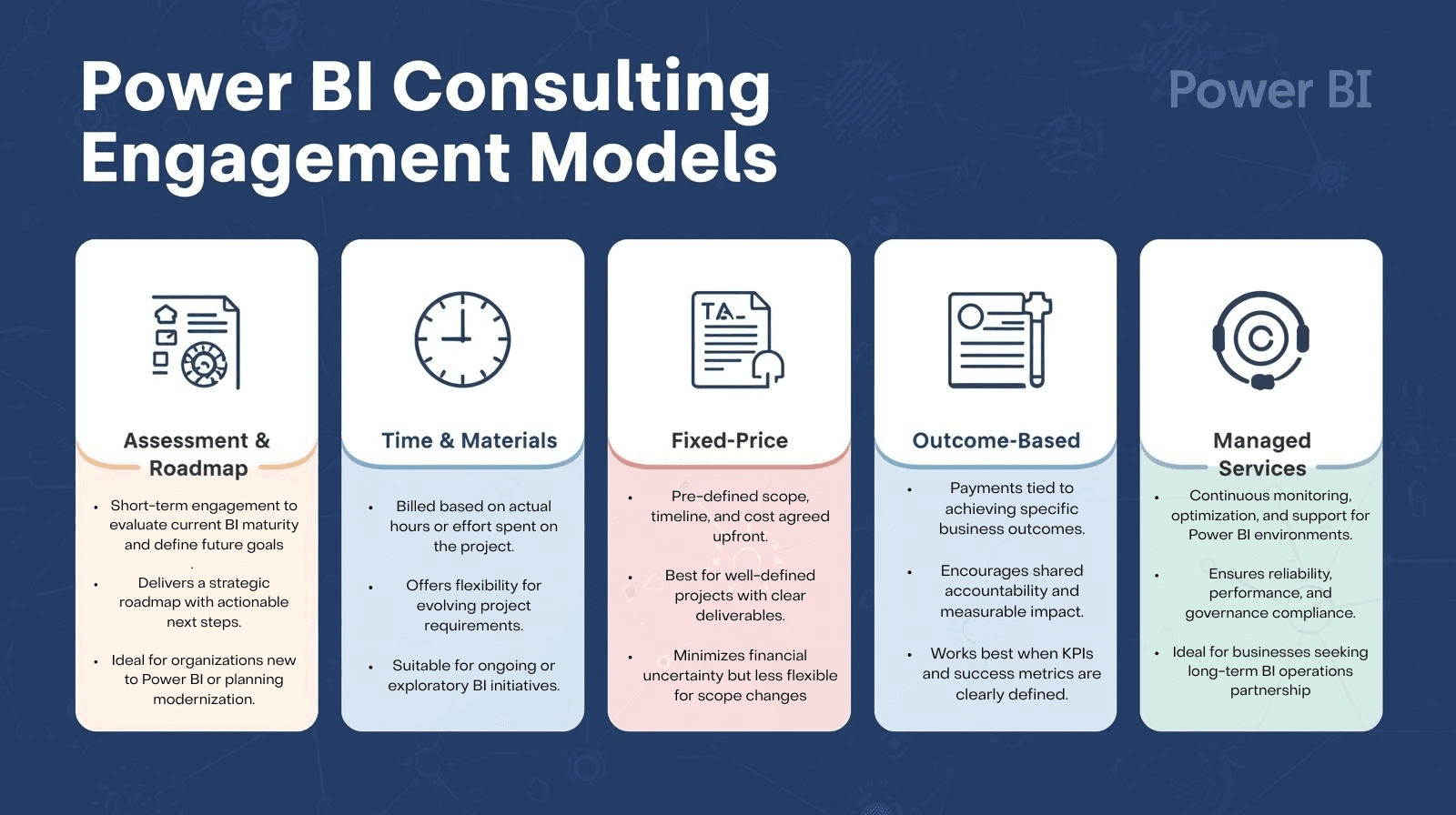

There’s no one-size-fits-all billing style. Pick a model that aligns with your clarity of scope and risk appetite:

Assessment & Roadmap (short fixed scope). A compact audit and roadmap that proves a partner understands your stack and gives a prioritized delivery plan. Good when execs need confidence before committing to build.

Time & Materials (T&M) with sprint cadence. Ideal for iterative work where scope evolves. Use milestone acceptance criteria (and weekly demos) to manage cost creep.

Fixed-price project. Works when scope is tightly defined and deliverables are clear (e.g., build 3 dashboards and a certified dataset). Be careful: add clear change-control clauses.

Outcome-based contracts. Pay tied to adoption or business outcomes (e.g., hours saved or CPA reductions). Attractive but requires rigorous measurement plans.

Managed services. Ongoing SLA for support, refresh monitoring, and enhancements — great once you move from pilot to production.

A common pattern that balances risk: run an initial 4–6 week assessment, then move into T&M sprints with clearly defined acceptance criteria tied to ROI and governance checkpoints.
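One way to enforce those checkpoints is a simple burn check at each sprint review. The sketch below assumes a straight-line forecast, meaning spend to date plus the remaining sprints at the average burn so far. It also assumes a pass/fail flag for each sprint demo. The `Sprint` class, budget, and figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sprint:
    cost: float           # actual spend for the sprint, in USD
    acceptance_met: bool  # did the demo pass the sprint's agreed acceptance criteria?


def estimate_at_completion(done: list[Sprint], planned_sprints: int) -> float:
    """Spend so far plus the remaining sprints at the average burn rate observed to date."""
    if not done:
        raise ValueError("need at least one completed sprint to forecast")
    spent = sum(s.cost for s in done)
    remaining = max(planned_sprints - len(done), 0)
    return spent + (spent / len(done)) * remaining


def sprint_review(done: list[Sprint], planned_sprints: int, budget: float) -> list[str]:
    """Return warnings to raise at the next governance checkpoint."""
    warnings = []
    eac = estimate_at_completion(done, planned_sprints)
    if eac > budget:
        warnings.append(f"forecast ${eac:,.0f} exceeds budget ${budget:,.0f}")
    failed = [i for i, s in enumerate(done, start=1) if not s.acceptance_met]
    if failed:
        warnings.append(f"acceptance criteria not met in sprint(s) {failed}")
    return warnings


history = [Sprint(12_000, True), Sprint(14_000, False)]
for warning in sprint_review(history, planned_sprints=6, budget=70_000):
    print(warning)
```

A straight-line forecast is crude, but it surfaces cost creep after two sprints rather than at the final invoice.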

Typical deliverables and a realistic timeline

Concrete deliverables you should require

Executive summary + prioritized roadmap with KPIs to validate impact.

Source-to-report lineage diagram and inventory of datasets.

A certified semantic dataset (star schema, documented measures).

2–4 production-ready dashboards (PBIX + published app) with test cases.

Governance guide: workspace templates, naming conventions, RLS mapping, sensitivity labels and DLP recommendations.

Training materials, runbooks, and a CoE handover plan.

Support plan & SLAs for refresh reliability and incident response.
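The "test cases" for production dashboards can be as simple as reconciliation checks, where a figure shown in the report must match the same figure queried from the source system. Here is a minimal Python sketch. It assumes both values have already been retrieved (they are hard-coded placeholders here) and uses a hypothetical 0.1% relative tolerance.

```python
import math


def reconciles(dashboard_value: float, source_value: float, rel_tol: float = 0.001) -> bool:
    """True when the dashboard figure matches the source system within a relative tolerance."""
    return math.isclose(dashboard_value, source_value, rel_tol=rel_tol)


# Hypothetical test cases: (description, value shown in the dashboard, value queried from the source).
# In practice both numbers would be fetched automatically; they are hard-coded placeholders here.
CASES = [
    ("Total revenue, Jan 2024", 1_250_400.00, 1_250_400.00),
    ("Order count, Jan 2024", 8_312, 8_312),
    ("Refund total, Jan 2024", 18_950.00, 19_420.00),  # deliberately off, to show a failure
]


def failing_cases(cases, rel_tol: float = 0.001) -> list[str]:
    return [name for name, dash, src in cases if not reconciles(dash, src, rel_tol)]


print(failing_cases(CASES))  # ['Refund total, Jan 2024']
```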

Sample timeline for a small pilot (8–12 weeks)

Week 0–1: Kickoff & stakeholder interviews.

Week 2: Audit & quick-win delivery (one automated report).

Week 3–5: Data modeling and ETL work; iterate on data contracts.

Week 6–9: Dashboard builds and UAT sessions with end users.

Week 10–12: Publish, schedule refresh, enable RLS, and run training.

Larger programs, such as those with Fabric/platform integration, analytics embedded in apps, or enterprise-wide governance, typically take 3–9 months depending on integrations and regulatory depth.

Pricing & cost drivers (ballpark figures)

Pricing varies by region and depth, but expect rough ranges:

Assessment & roadmap: $5k–$30k

Pilot (model + 1–3 dashboards): $20k–$60k

Full implementation (mid-size org): $60k–$250k

Enterprise program (CoE + embedding): $250k+

Managed services: $2k–$15k/month

What drives price

Number and complexity of data sources (legacy systems are expensive).

Volume and cardinality of data (high-cardinality columns often need aggregation tables to stay performant).

Embedding needs and multi-tenant isolation.

Governance and compliance requirements, such as customer-managed keys (CMK) and data residency.

AI and LLM usage (Copilot and custom LLM integrations add capacity or API spend).

Documentation, testing, and training depth.
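To show why high cardinality drives cost, the sketch below pre-aggregates hypothetical event-level rows to a (date, category) grain. This is the same idea behind aggregation tables in a Power BI model. It is plain Python, not Power BI's aggregation feature. The trade-off is that questions about individual customers or timestamps still need the detail table.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical event-level rows: timestamps and customer IDs are high-cardinality columns.
events = [
    {"ts": "2024-03-01T09:15:02", "customer_id": "C001", "category": "Bikes", "amount": 120.0},
    {"ts": "2024-03-01T11:40:55", "customer_id": "C002", "category": "Bikes", "amount": 80.0},
    {"ts": "2024-03-01T12:05:10", "customer_id": "C003", "category": "Helmets", "amount": 35.0},
    {"ts": "2024-03-02T08:00:00", "customer_id": "C001", "category": "Bikes", "amount": 200.0},
    {"ts": "2024-03-02T17:30:45", "customer_id": "C004", "category": "Bikes", "amount": 60.0},
]


def aggregate_daily(rows: list[dict]) -> dict[tuple[str, str], dict]:
    """Collapse rows to (date, category) grain, dropping timestamp and customer detail."""
    agg = defaultdict(lambda: {"amount": 0.0, "rows": 0})
    for r in rows:
        day = datetime.fromisoformat(r["ts"]).date().isoformat()
        bucket = agg[(day, r["category"])]
        bucket["amount"] += r["amount"]
        bucket["rows"] += 1
    return dict(agg)


summary = aggregate_daily(events)
print(f"{len(events)} detail rows -> {len(summary)} aggregate rows")
for (day, category), values in sorted(summary.items()):
    print(day, category, values["amount"], values["rows"])
```

On real transaction volumes the reduction is what matters: most dashboard visuals can be answered from the small table. Designing and testing that split is part of why large, high-cardinality datasets cost more to model.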

A transparent proposal will break these drivers out. If it doesn't, ask the vendor for unit costs (hours and rates per role) so you can compare proposals like for like.
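Once you have hours and rates per role, the comparison is simple arithmetic. The sketch below uses hypothetical roles, rates, and hours. Two quotes with nearly the same total can hide very different staffing mixes, and the blended rate exposes the difference.

```python
# Hypothetical proposals: hourly rates (USD) and estimated hours per role, as a vendor might disclose them.
proposals = {
    "Vendor A": {
        "rates": {"architect": 180, "data engineer": 130, "report designer": 110},
        "hours": {"architect": 40, "data engineer": 200, "report designer": 60},
    },
    "Vendor B": {
        "rates": {"architect": 200, "data engineer": 120, "report designer": 100},
        "hours": {"architect": 24, "data engineer": 260, "report designer": 40},
    },
}


def total_cost(p: dict) -> float:
    """Sum of hours x rate for each role; refuses to guess a rate the vendor did not disclose."""
    missing = p["hours"].keys() - p["rates"].keys()
    if missing:
        raise ValueError(f"no rate disclosed for: {sorted(missing)}")
    return sum(hours * p["rates"][role] for role, hours in p["hours"].items())


def blended_rate(p: dict) -> float:
    """Average cost per hour across all roles."""
    return total_cost(p) / sum(p["hours"].values())


for name, p in proposals.items():
    print(f"{name}: ${total_cost(p):,.0f} for {sum(p['hours'].values())} h, "
          f"blended ${blended_rate(p):,.2f}/h")
```

In this made-up example the totals differ by only $200. Vendor B, however, allocates far fewer architect hours, which is worth probing before you treat the two bids as equivalent.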

How to evaluate proposals

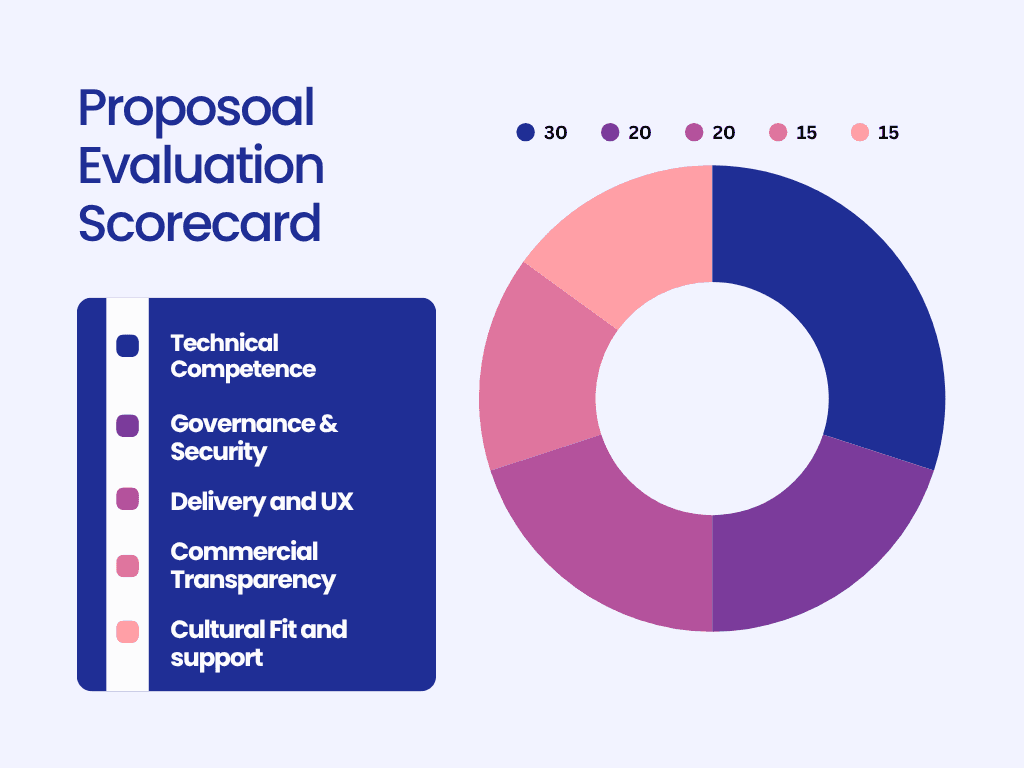

When reviewing finalists, score along these dimensions:

Technical competence (30%). Data engineering, ETL patterns, and semantic modeling experience. Ask for anonymized sample models.

Governance & security (20%). RLS, sensitivity labeling, DLP, and tenant admin experience. Can they implement a production-ready CoE?

Delivery & UX (20%). Iterative demos, prototyping discipline, and emphasis on adoption. Do they test with actual users?

Commercial transparency (15%). Clear milestones, change-control process, and licensing/TCO guidance.

Cultural fit & support model (15%). Communication, time zone overlap, and post-launch support plans.
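The weights above translate directly into a scoring sheet. Here is a minimal sketch, assuming the panel scores each dimension on a 1–5 scale. The dimension keys and finalist scores are hypothetical.

```python
import math

# Weights from the rubric above; panel scores are assumed to be on a 1-5 scale.
WEIGHTS = {
    "technical": 0.30,
    "governance_security": 0.20,
    "delivery_ux": 0.20,
    "commercial": 0.15,
    "fit_support": 0.15,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)  # sanity check: weights cover 100%


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-dimension scores into one number on the same 1-5 scale."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weight * scores[dim] for dim, weight in WEIGHTS.items())


# Hypothetical panel scores for two finalists.
finalists = {
    "Finalist A": {"technical": 4, "governance_security": 3, "delivery_ux": 5,
                   "commercial": 4, "fit_support": 3},
    "Finalist B": {"technical": 5, "governance_security": 4, "delivery_ux": 3,
                   "commercial": 3, "fit_support": 4},
}
ranking = sorted(finalists, key=lambda name: weighted_score(finalists[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(finalists[name]):.2f}")
```

Rejecting incomplete score sheets keeps one evaluator's skipped dimension from silently lowering a vendor's total.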

Ask for references, and speak with customers of similar size and regulatory requirements. If a vendor resists sharing sample models or sanitized PBIX files, treat that as a red flag.

Red flags and what to avoid

Deliverables without handover. If the proposal ends at “dashboards delivered” without training, runbooks, or dataset certification, the value will degrade fast.

Lack of governance focus. If RLS, sensitivity labels and automation aren’t on their checklist, you’ll pay in rework and risk.

Too-good-to-be-true fixed bids. Unrealistic timelines for vague scope or guaranteed ROI with no pilot data are suspect.

No evidence of reuse. Providers that always start from scratch (no templates, no semantic libraries) will be slower and costlier.

Opaque pricing. Vendors that won’t show hours per role or hide embedding and cloud costs will surprise you later.

The right Power BI consulting partner shortens time-to-value by combining strong data engineering, reusable semantic modeling, UX-driven dashboards, and production-grade governance. Insist on measurable deliverables, reusable assets, and a handover plan that turns a pilot into a repeatable program. When proposals explicitly map to ROI, governance and adoption metrics, you’ll reduce procurement risk and accelerate real impact — from faster decisions to lower ad waste and stronger business outcomes.